Disclaimer: I’m no ML engineer, just a curious person :) Feel free to add or correct any information!

The idea of this post is to give a brief overview of LLMs and their tooling in simple terms, as well as the hype around them. At the end we will create a simple AI agent to show how LLMs can power real-life solutions.

What are LLMs?

You might have heard about LLMs (Large Language Magic✨ Models). They are AI models trained on huge datasets using deep learning techniques. That training lets them work across a wide range of applications: they can interpret the text input, draw on the vast amount of data they were trained on, and predict what the best response would be. If needed, they can also reason about complex data to provide the answer.

OK, but what about the hype?

Lately we have been seeing many companies suddenly become “AI-first” or “AI-driven”, followed by countless AI SaaS companies and AI rockstars like Sam Altman. Also, if you go to Product Hunt, a website that showcases product releases, it seems like nearly all of the products and companies there lately are AI-driven or built upon AI capabilities.

News about Cisco having efficient measures towards focusing on AI and Cybersecurity

It’s the AI train! Either you hop on, or you’re left behind ¯\_(ツ)_/¯ But why is that?

One big advantage of LLM/“AI” solutions is that they leverage a tool we are all very used to using to get things done: talking.

Because AI can understand natural language, we can achieve results that were previously restricted to technically experienced people, just by talking to a machine. We can ask the machine a question and it magically✨ produces the result. This makes interacting with systems, and sometimes data analysis, more accessible to everyone.

We had things in the past that mimicked this, like the Brazen Head, but it was played by a person. Now we have the chance to have a real machine doing it. R.I.P. Akinator

Using “AI” and LLMs

Nowadays we get to use ChatGPT and other LLMs to help with daily tasks and get answers fast. One important thing I’ve learned about LLMs is that there are models, and there are tools that enhance models. So when you ask:

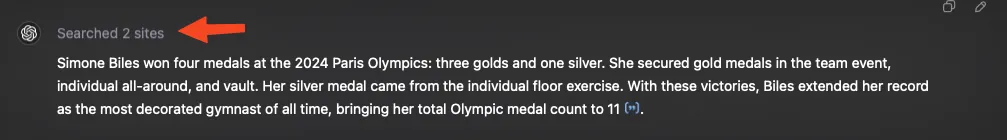

Tell me how many medals Simone Biles got in the 2024 Paris Olympics

You get:

Simone Biles won four medals at the 2024 Paris Olympics: three golds and one silver. She secured gold medals in the team event, individual all-around, and vault. Her silver medal came from the individual floor exercise. With these victories, Biles extended her record as the most decorated gymnast of all time, bringing her total Olympic medal count to 11

And we can notice something in the response:

So the model searched 2 websites, that’s awesome!! Well… not exactly.

One thing that at first for me at first was magical, but now I understood. Is that models like model gpt4o

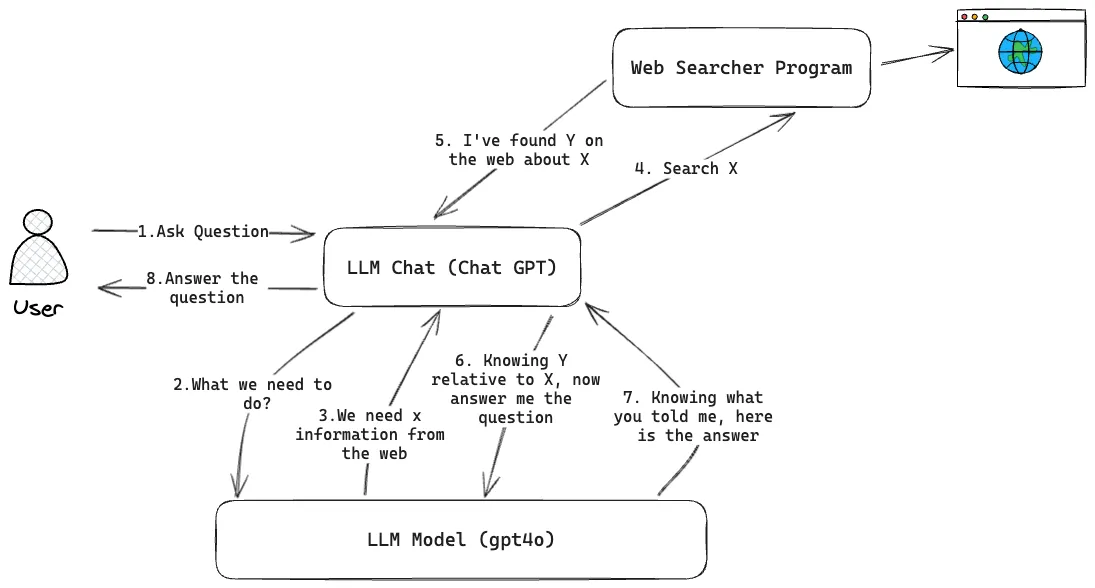

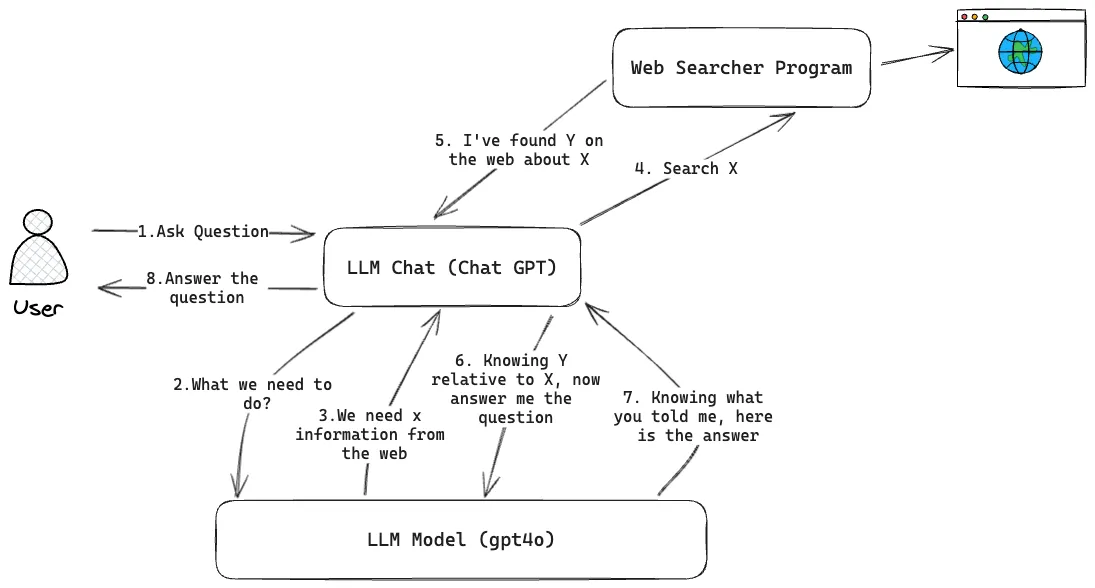

doesn’t know nothing about the 2024 olympic games, since it was trained with data up to Up to Oct 2023. So in order for it to know we must search the web to do it, and for that we use AI Agents ✨🤖 to find the information to provide the LLM. Here is a simplified diagram of the flow
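To make that flow concrete, here is a minimal sketch in plain Python. The names `fake_search`, `fake_llm` and `answer_with_search` are made up for illustration and stand in for a real search tool and a real model; nothing here calls an actual API.

```python
# Hypothetical stand-ins: a real agent would call a search API and an LLM here.
def fake_search(query: str) -> list[str]:
    """Pretend web search that returns canned snippets."""
    return ["Simone Biles finished the Paris 2024 Olympics with four medals."]


def fake_llm(prompt: str) -> str:
    """Pretend model: it can only answer from the context inside the prompt."""
    if "Context:" in prompt:
        context = prompt.split("Context:", 1)[1].split("Question:", 1)[0].strip()
        return f"Based on the search results: {context}"
    return "I don't know, my training data ends before that event."


def answer_with_search(question: str, search, llm) -> str:
    """Search first, then hand the snippets to the model together with the question."""
    snippets = search(question)
    context = "\n".join(snippets)
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    return llm(prompt)


question = "How many medals did Simone Biles win in Paris 2024?"
print(fake_llm(f"Question: {question}"))                   # no context: the model cannot know
print(answer_with_search(question, fake_search, fake_llm))  # with context: it can answer
```

The point is only the ordering: the search happens outside the model, and its results travel to the model inside the prompt.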

This is one example of Prompt Engineering techniques, leveraging RAG and ReAct. We can define Prompt Engineering as follows:

Prompt engineering skills help to better understand the capabilities and limitations of LLMs. Researchers use prompt engineering to improve safety and the capacity of LLMs on a wide range of common and complex tasks such as question answering and arithmetic reasoning. Developers use prompt engineering to design robust and effective prompting techniques that interface with LLMs and other tools.

But enough about concepts, let’s build one!

Demo time! Let’s create a simple AI Agent

Code here

Jupyter Notebook in Colab

So let’s see how you can create your own LLM interactions.

Setup of tools

First of all, we need to create credentials for the tools we will use. Apart from OpenAI, all of them have generous free tiers, and with OpenAI you can use prepaid credits to avoid skyrocketing bills.

- Open AI
  - Video about adding Credits to OpenAI
  - Open AI platform Dashboard
  - Credit Card required
- Tavily
  - No Credit Card required
  - 1,000 API calls (Monthly)
  - API
- LangSmith
  - Video About LangSmith
  - No Credit Card required

Installing dependencies

With the accounts set up we can start the notebook. We are going to use a Python notebook to run this code. I suggest using Google Colab, which is a free Python notebook on the web.

!pip install langchain -qqq

!pip install langchain-openai -qqq

!pip install langchain_community -qqq

!pip install tavily-python -qqq

After installing the dependencies we can set up the API keys for this demo

import getpass

import os

# Recommend using LangSmith as it provides LOTS of Tracing capabilities!

os.environ["LANGCHAIN_TRACING_V2"] = "true"

os.environ["LANGCHAIN_API_KEY"] = getpass.getpass(prompt='LangChain API KEY: ')

os.environ["LANGCHAIN_PROJECT"] = getpass.getpass(prompt='LangChain Project: ')

os.environ["OPENAI_API_KEY"] = getpass.getpass(prompt='Open AI API Key: ')

os.environ["TAVILY_API_KEY"] = getpass.getpass(prompt='TAVILY API Key: ')

You will be prompted to enter your credentials, which are then loaded into the application.
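If you re-run that cell often, being prompted every time gets tedious. A small helper like the one below, a sketch where `ensure_env` is a name I made up, only asks for a value when the environment variable is not already set.

```python
import getpass
import os


def ensure_env(name: str, prompt: str) -> str:
    """Return the value of env var `name`, prompting for it only if it is missing."""
    value = os.environ.get(name)
    if not value:
        value = getpass.getpass(prompt=prompt)
        os.environ[name] = value
    return value


# Example usage (commented out so nothing prompts on import):
# ensure_env("OPENAI_API_KEY", "Open AI API Key: ")
# ensure_env("TAVILY_API_KEY", "TAVILY API Key: ")
```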

Configuring Open AI

With the credentials set up we can configure our model, gpt-4o-mini from OpenAI

from langchain_openai import ChatOpenAI

from langchain_core.messages import HumanMessage, AIMessage

llm = ChatOpenAI(model="gpt-4o-mini")

And we can test to see how it goes

# Test the OpenAI model

llm.invoke(input="Hi")

# Response =>

AIMessage(content='Hello! How can I assist you today?', additional_kwargs={'refusal': None}, response_metadata={'token_usage': {'completion_tokens': 9, 'prompt_tokens': 8, 'total_tokens': 17}, 'model_name': 'gpt-4o-mini-2024-07-18', 'system_fingerprint': 'fp_48196bc67a', 'finish_reason': 'stop', 'logprobs': None}, id='run-98a5962f-73de-4440-a075-12075ebfe65a-0', usage_metadata={'input_tokens': 8, 'output_tokens': 9, 'total_tokens': 17})

Defining which tools to use

Now we are going to define the tools that will be used. In this case we will ask the same Simone Biles question about the Olympics, so we need access to the internet, and for that we will use Tavily

from langchain_community.tools.tavily_search import TavilySearchResults

search = TavilySearchResults(max_results=2)

# If you want to test

# search_results = search.invoke("Simone Biles 2024 Paris Olympic games medals count")

# print(search_results)

# If you want to add more tools you can see the available tools in https://python.langchain.com/v0.1/docs/integrations/tools/

tools = [search]

Then we can test the web integration with

# test the tavily search and its response structure

search.run("Simone Biles 2024 Paris Olympic games medals count")

# =>

[{'url': 'https://olympics.com/en/news/simone-biles-all-titles-records-and-medals-complete-list-paris-2024',

'content': "U.S. gymnastics superstar Simone Biles already owns more world and Olympic medals than any gymnast in history. The 27-year-old is the owner of 10 Olympic medals, including seven golds, and 30 world medals - 23 of which are gold. At the Olympic Games Paris 2024, the American has the chance to add up to two medals to her haul, having helped Team USA to gold in the women's final and taking the ..."},

{'url': 'https://www.usatoday.com/story/sports/olympics/2024/07/28/simone-biles-how-many-olympics-appearances/74230944007/',

'content': "The 2024 Paris Olympics are Simone Biles' third Olympic Games and she seems poised to add to her already-impressive medal count. ... Biles has competed in two other Olympic Games: the 2016 Rio ..."}]
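Since the search returns a list of dictionaries with `url` and `content` keys, one way to hand it to a model is to flatten it into a numbered context block. This is only a sketch of the idea: `build_context` and the sample data are hypothetical, and the agent we build next does this formatting for us.

```python
def build_context(results: list[dict], max_chars: int = 300) -> str:
    """Turn search results into a numbered, source-tagged text block for a prompt."""
    lines = []
    for i, item in enumerate(results, start=1):
        snippet = item["content"][:max_chars]  # keep each snippet short
        lines.append(f"[{i}] {item['url']}\n{snippet}")
    return "\n\n".join(lines)


# Made-up results with the same shape as the Tavily output above
sample_results = [
    {"url": "https://example.com/a", "content": "First snippet."},
    {"url": "https://example.com/b", "content": "Second snippet."},
]

context = build_context(sample_results)
prompt = (
    "Answer using only the sources below.\n\n"
    f"{context}\n\n"
    "Question: How many medals did Simone Biles win in Paris 2024?"
)
print(prompt)
```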

Create ReAct AI Agent

For this tutorial we are going to create a ReAct AI Agent. It will be able to reason about the data provided and act if necessary to fetch more information. We will also give the Agent a memory window of the last 4 exchanges, so it has context and, if needed, can use previously searched information to answer new questions.

from langchain.agents import create_react_agent

from langchain.chains.conversation.memory import ConversationBufferWindowMemory

from langchain import hub

from langchain.agents import AgentExecutor

# Creating a memory for the Agent over a Chat interface, so when we call the agent it will remember the last messages
conversational_memory = ConversationBufferWindowMemory(
    memory_key='chat_history',
    k=4,  # Number of recent exchanges (human + AI message pairs) kept in memory
    return_messages=True  # Return the history as message objects rather than one string
)

# Load the prompt template. LangChain uses it to format the messages that are sent to the model.
## Must have a LangChain API key to fetch resources from the LangChain Hub.
prompt = hub.pull("hwchase17/react-chat") # https://smith.langchain.com/hub/hwchase17/react-chat?organizationId=c005d9c3-9654-5134-8ec9-122b5a609562

agent = create_react_agent(
    tools=tools,
    llm=llm,
    prompt=prompt,
)

# Create an agent executor by passing in the agent and tools
agent_executor = AgentExecutor(
    agent=agent,  # The base agent, a ReAct agent
    tools=tools,  # Set of tools provided to the Agent Executor
    verbose=True,  # Verbosity to help understand and debug
    memory=conversational_memory,  # The memory for context in the LLM and history
    max_iterations=5,  # An agent can loop through different tools to get a job done; this limits it to 5 iterations
    max_execution_time=600,  # Wall-clock limit, in seconds, for the whole run
    handle_parsing_errors=True,  # Send parsing errors back to the model instead of raising
)
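As an aside, the idea behind a windowed memory can be sketched in a few lines of plain Python. This is an illustration of the concept only, not LangChain's implementation; `WindowMemory` is a name I made up, and it assumes the window counts exchanges (one human message plus one AI reply).

```python
from collections import deque


class WindowMemory:
    """Keeps only the last `k` (human, ai) exchanges; older ones are dropped."""

    def __init__(self, k: int):
        self.exchanges = deque(maxlen=k)  # deque discards the oldest item when full

    def save(self, human: str, ai: str) -> None:
        self.exchanges.append((human, ai))

    def as_messages(self) -> list[tuple[str, str]]:
        """Flatten the stored exchanges into (role, text) pairs, oldest first."""
        messages = []
        for human, ai in self.exchanges:
            messages.append(("human", human))
            messages.append(("ai", ai))
        return messages


memory = WindowMemory(k=2)
memory.save("q1", "a1")
memory.save("q2", "a2")
memory.save("q3", "a3")  # pushes the first exchange out of the window
print(memory.as_messages())
```

A small window keeps the prompt short and cheap, at the price of the agent forgetting anything older than the last few turns.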

Now it’s time to ask the question!

agent_executor.invoke({"input": "How many medals Simone Biles got in the 2024 Paris olympics?"})['output']

The Agent’s thinking process is printed alongside the response:

> Entering new AgentExecutor chain...

Thought: Do I need to use a tool? Yes

Action: tavily_search_results_json

Action Input: "Simone Biles medals 2024 Paris Olympics"

[{'url': 'https://people.com/simone-biles-wins-four-medals-2024-olympics-8690143', 'content': "Simone Biles Ends Her 2024 Olympic Games with Four Medals: 'I've Accomplished Way More Than My Wildest Dreams'. Biles finished out her Paris Olympics with a silver medal in the individual floor ..."}, {'url': 'https://olympics.com/en/news/simone-biles-all-titles-records-and-medals-complete-list-paris-2024', 'content': "U.S. gymnastics superstar Simone Biles already owns more world and Olympic medals than any gymnast in history. The 27-year-old is the owner of 10 Olympic medals, including seven golds, and 30 world medals - 23 of which are gold. At the Olympic Games Paris 2024, the American has the chance to add up to two medals to her haul, having helped Team USA to gold in the women's final and taking the ..."}]

Do I need to use a tool? No

Final Answer: Simone Biles won a total of four medals at the 2024 Paris Olympics, including a silver medal in the individual floor exercise and gold medals with Team USA in the women's final.

> Finished chain.

Simone Biles won a total of four medals at the 2024 Paris Olympics, including a silver medal in the individual floor exercise and gold medals with Team USA in the women's final.

As you can see, the AI Agent followed the same structure shown initially
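To demystify the trace a bit, here is a toy version of that Thought / Action / Observation loop in plain Python. It is a sketch under my own assumptions, not how `AgentExecutor` is actually implemented: `parse_step`, `run_react` and `scripted_llm` are hypothetical names, and the "model" is scripted so the example runs without any API key.

```python
import re

# Matches the "Action: ... / Action Input: ..." pair seen in the trace above
ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)", re.DOTALL)


def parse_step(text: str):
    """Return ('final', answer) or ('action', tool_name, tool_input)."""
    if "Final Answer:" in text:
        return ("final", text.split("Final Answer:", 1)[1].strip())
    match = ACTION_RE.search(text)
    if match is None:
        raise ValueError(f"Could not parse model output: {text!r}")
    return ("action", match.group(1).strip(), match.group(2).strip().strip('"'))


def run_react(question: str, llm, tools: dict, max_iterations: int = 5) -> str:
    """Loop: ask the model, run the tool it picks, feed the observation back."""
    scratchpad = ""
    for _ in range(max_iterations):
        output = llm(question, scratchpad)
        step = parse_step(output)
        if step[0] == "final":
            return step[1]
        _, tool_name, tool_input = step
        tool = tools.get(tool_name)
        observation = tool(tool_input) if tool else f"Unknown tool: {tool_name}"
        scratchpad += f"{output}\nObservation: {observation}\n"
    return "Agent stopped: iteration limit reached."


def scripted_llm(question: str, scratchpad: str) -> str:
    """Fake model: searches once, then answers from the observation."""
    if "Observation:" not in scratchpad:
        return (
            "Thought: Do I need to use a tool? Yes\n"
            "Action: search\n"
            'Action Input: "Simone Biles medals 2024 Paris Olympics"'
        )
    return "Thought: Do I need to use a tool? No\nFinal Answer: Four medals."


toy_tools = {"search": lambda query: "Biles ended the 2024 Games with four medals."}
print(run_react("How many medals?", scripted_llm, toy_tools))
```

The iteration cap plays the same role as `max_iterations` in the executor: if the model never produces a final answer, the loop still ends.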

If you want to ask more questions you can use the following:

# Here is a custom invoker that you can type a question into!

agent_executor.invoke({"input": input('Message: ')})['output']
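If you would rather keep chatting without re-running the cell, a small loop does the job. This is a sketch: `chat_loop` is a hypothetical helper, and the `ask` argument is whatever function turns a message into a reply.

```python
def chat_loop(ask, read=input, write=print) -> int:
    """Keep asking until the user types 'exit' or an empty line; return the number of turns."""
    turns = 0
    while True:
        message = read("Message: ").strip()
        if message.lower() in {"", "exit"}:
            return turns
        write(ask(message))
        turns += 1


# With the agent from this post it could be used like this (commented out, needs the notebook state):
# chat_loop(lambda text: agent_executor.invoke({"input": text})["output"])
```

Because the agent executor was created with memory, follow-up questions in the same loop can build on earlier answers.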

LangSmith Tracing

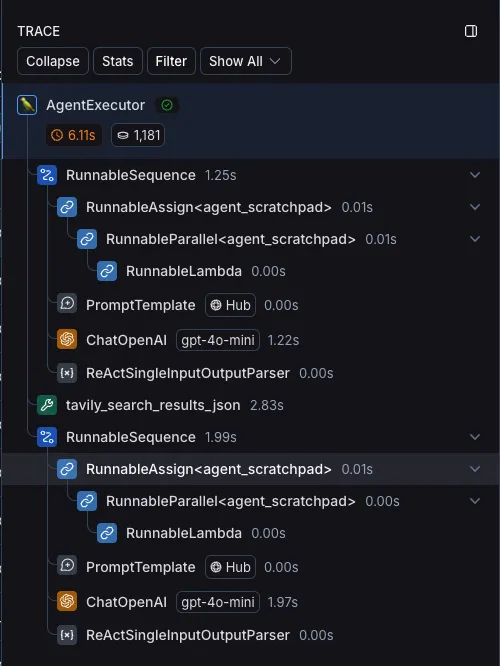

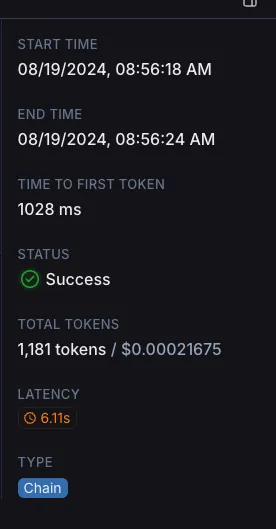

If you look into your LangSmith project you will be able to see all the executions, and how LangChain thinks, in a dashboard, along with the token usage and the price of each run.

In this case it creates a prompt for OpenAI using the prompt template; then, from the response, it knows it needs to query the web. From there it gets the results and sends them back to the LLM.

It also shows the total cost of my operation.
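The cost itself is simple arithmetic over the token counts. Here is a rough sketch using the token numbers from the "Hi" test earlier (8 input, 9 output). The prices are placeholders I invented for the example, not real OpenAI pricing, so check the current price list before relying on any figure.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Cost in USD, given prices expressed per one million tokens."""
    total = input_tokens * input_price_per_million + output_tokens * output_price_per_million
    return total / 1_000_000


# Placeholder prices in USD per million tokens: NOT real pricing
cost = estimate_cost(8, 9, input_price_per_million=1.0, output_price_per_million=2.0)
print(f"${cost:.6f}")
```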

Conclusion

As you can see, it is simple to create an LLM AI Agent that uses the web to produce valuable information. If you want to explore more, I recommend going through all the tutorials and available tools in LangChain and building your own custom AI Agents!

Feel free to message me on X/Twitter!